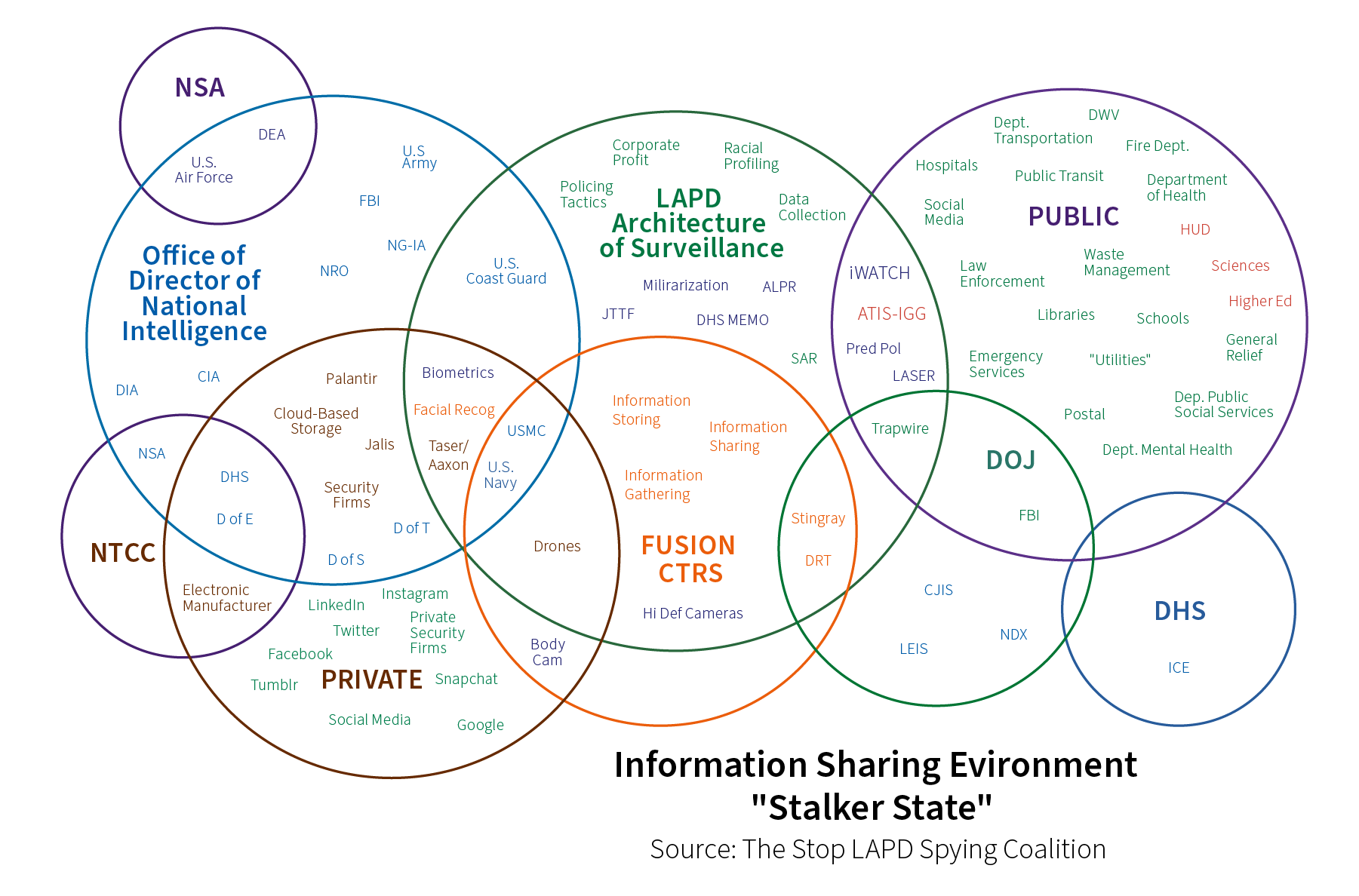

Social media companies are letting police, federal agencies, and third-party tech companies surveil QT2SBIPOC communities and organizers via their platforms. Access to mainstream social media platforms is often severely limited for Black, trans, and sex worker communities because of the ways in which these identities are policed and restricted by algorithms that are inherently racist, transphobic, and whorephobic. For example, as a result of content moderation practices, sex workers report difficulty finding each other on social media, Black femmes and people who are coded as sex workers are banned from Instagram at a higher rate, and real name policies prevent sex workers and trans folks from using platforms. Community members are forced to decide whether to risk using and relying on specific platforms, which includes facing the risk of removal by platform moderators who may delete accounts without reason. These removals not only disrupt economic opportunities and community connection, they also make community building, organizing, and mutual aid more difficult.

“

We see corporations like Facebook acting as arms of surveillance and providing all kinds of data or opportunities for law enforcement and corporations to capture data and use it in punitive ways. The way in which surveillance impacts people whom I call the criminalized population...Black, brown, LGBTQIA, native, people of color broadly...for those people, it’s not about somebody snooping in your email or eavesdropping on your phone calls. It’s really about blocking you from employment opportunities, blocking you from education, blocking you from housing, blocking you from travel. It’s a whole range of ways in which it directly impacts your life when all this data is weaponized to be used against you.

”

—researcher & educator

Platform moderation, or the policing of a platform’s content, is a critical site where the criminalization of sex work intersects with threats to internet autonomy. In 2018, two congressional bills were signed into law: the Fight Online Sex Trafficking Act (FOSTA) and the Stop Enabling Sex Traffickers Act (SESTA). Together known as FOSTA-SESTA, they make platforms liable for sex work-related content and further criminalize sex workers.

FOSTA-SESTA was the first substantive amendment to Section 230 of the 1996 Communications Decency Act, which protected internet platforms from liability for the content users produce and post to their platforms. Many technology experts argue that Section 230 allowed for the growth of the free and open web that we use today.

But FOSTA-SESTA overrides Section 230’s “safe harbor” clause by increasing platform liability, in effect imposing broad internet censorship and a chilling effect.

“

The passage of SESTA and FOSTA has shut down spaces for people to do work digitally. It’s impacted people very negatively, here in DC specifically. The people who’ve been most impacted are trans women of color, Black trans women, because now more and more people have to do street work, which is more dangerous in a lot of ways, including because police are out here. It’s easier for people to be arrested and go into the criminal legal system.

”

— developer & digital security educator

FOSTA-SESTA further polices sex work online and exacerbates existing platform policies and practices that censor online sex work and suppress digital organizing efforts, such as shadowbanning, content moderation, and deplatforming.

This means that sex workers do not have the same access to the tools that non-sex working folks use to build business and to organize.

Like many entrepreneurial businesses, many sex workers rely on an online presence, marketing, and creating their own online content to conduct business, and FOSTA-SESTA threatens to eliminate this capacity.

FOSTA-SESTA also harms the freedom of movement and economic opportunity for migrant sex workers. With the Department of Homeland Security and the FBI raiding adult services’ ad platforms and seizing servers containing user IDs and personal data, migrant sex workers, especially those who are of undocumented status, are unable to find work and live in fear that the government will use this data to track, arrest, and deport them.

“

I was very shadow banned, so I wasn’t showing up in searches. We did a lot of organizing around this hashtag, #LetUsSurvive. Looking at the statistics for this hashtag, though the numbers are there, it does not show up as trending.

”

— sex worker rights activist

FOSTA-SESTA limits the resources that movement organizers need to combat harmful legislation, as many organizers fund their unpaid labor with money earned from sex work. Hacking//Hustling’s new report on content moderation in sex worker and activist communities in the wake of the 2020 mobilizations in support of Black Lives found that individuals who engaged in both sex work and activism experienced significantly more negative effects of platform policing than individuals who did only sex work or only activism. This suggests that there is a compounding effect where platforms more harshly police, censor, and deplatform activists who support their organizing work through sex work.